As a bit of housekeeping: this post addresses two concepts, the Principle of Least Privilege and biometrics. I covered Public Key Encryption and the Diffie-Hellman Key Exchange in my earlier blog post on cryptography, and both come up again in connection with these topics.

Introduction

How many passwords do you have? Let me rephrase that: how many unique passwords do you have? Do you keep a journal to track each password and the service it’s attached to? As someone who is passionate about security, I have maybe four unique passwords. That should tell you something, either about my laziness or about the issues surrounding account verification. In this blog, I’ll address the concept behind this predicament, called the Principle of Least Privilege, and talk about why the problem exists out of necessity.

Tangentially, in the previous blog post we saw the key exchange published by Diffie and Hellman, which lets two parties establish a shared secret by way of swapping private locks. On its own, though, that exchange does not prove who is on the other end, so what other ways are there to verify identity? From the user’s perspective, we have passwords, PINs, and other knowledge-based checks. Going further, newer technology includes fingerprint readers as a primary biometric identifier. We’ll explore this idea more, but recognize that it is important to solving our password issue: to minimize the number of passwords one person memorizes, we need a more universal method of verification.

Principle of Least Privilege

Let’s return to the Principle of Least Privilege, which is closely related to an idea called Separation of Duties. Going forward, we need to operate under some assumptions (such as a system in which the parties must communicate), which are referred to as formal agreements. Later, I will provide a more concrete example that operates under this assumption, in terms readers should already be familiar with. Readers can also continue with Smart’s Intro to Cryptography (page ~375) to read more about formal agreements.

I’d like to formalize this concept with a definition and then explain it in less formal terms. In computer science, the Principle of Least Privilege is the idea that at any point in a program, every module (a process, user, or program) has access only to the information and resources it needs for a legitimate purpose, and no more. In practice, this means security-sensitive tasks should be restricted in scope. Think about the administrator login your computer requires before you can modify system files. Here, the defined privilege is that only administrators can alter those files, and those below them in the hierarchy, i.e., regular users, cannot.
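To make the definition concrete, here is a minimal Python sketch of a deny-by-default permission check. The role names and permissions are hypothetical, chosen to mirror the administrator example; a real operating system enforces this through its own access-control mechanisms, not a dictionary like this one.

```python
from enum import Enum, auto

class Permission(Enum):
    READ_OWN_FILES = auto()
    WRITE_OWN_FILES = auto()
    MODIFY_SYSTEM_FILES = auto()

# Hypothetical role table: each role is granted only what its job requires.
ROLE_PERMISSIONS = {
    "user": frozenset({Permission.READ_OWN_FILES, Permission.WRITE_OWN_FILES}),
    "administrator": frozenset(Permission),  # every permission, including system files
}

def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: a role missing from the table gets no permissions at all."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())

print(is_allowed("user", Permission.MODIFY_SYSTEM_FILES))           # False
print(is_allowed("administrator", Permission.MODIFY_SYSTEM_FILES))  # True
print(is_allowed("guest", Permission.READ_OWN_FILES))               # False
```

The deny-by-default lookup is the design choice that matters here: forgetting to list a role fails closed rather than open.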

Moving on from our previous examples, there is something the average reader has almost certainly experienced that demonstrates the concept of Least Privilege: a bank. Consider two people who wish to wire money to each other (I know wires didn’t exist back then, but bear with me). Both must be able to identify themselves, and the sender must indicate the intended recipient. I will use the example of Jaime and Cersei Lannister transferring money through the Iron Bank (of course, to celebrate the return of Game of Thrones). The interaction might go something like this:

- Jaime appears in person before the Iron Bank and presents an agreed-upon form of ID (in the real world, this is something like your Social Security number).

- He tells the bank, “I would like to transfer $200 to Cersei Lannister,” who is also a member of the bank.

- Cersei appears before the bank to receive the transfer, but to verify that the money goes to the correct person, she must also be identified in the same manner as Jaime.

- After verification, the transfer goes through and both parties leave satisfied.

Such a simple transaction! In this example, neither Jaime nor Cersei knew the other’s credentials, but the bank knew both. The idea is that, so long as the central authority remains secure (perhaps with symmetric key encryption?), there is only a small chance of information being compromised. Of course, this is good in theory, but in practice it is very hard to do correctly (“How does the authority remain secure?”).
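The bank’s role as the central authority can be sketched in a few lines of Python. This is a toy under stated assumptions: the `Bank` class, its method names, and the credentials are all hypothetical, and the bank stores only salted PBKDF2 hashes (via the standard library’s `hashlib.pbkdf2_hmac`) rather than the raw credentials. Neither customer ever sees the other’s credential; only the bank can check both.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # PBKDF2 work factor; an illustrative value, not a recommendation

def _hash(credential: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", credential.encode(), salt, ITERATIONS)

class Bank:
    """Toy central authority that can verify every member."""

    def __init__(self) -> None:
        self._records: dict[str, tuple[bytes, bytes]] = {}  # name -> (salt, hash)
        self.balances: dict[str, int] = {}

    def enroll(self, name: str, credential: str, balance: int = 0) -> None:
        salt = os.urandom(16)
        self._records[name] = (salt, _hash(credential, salt))
        self.balances[name] = balance

    def verify(self, name: str, credential: str) -> bool:
        record = self._records.get(name)
        if record is None:
            return False
        salt, expected = record
        # Constant-time comparison avoids leaking how many bytes matched.
        return hmac.compare_digest(_hash(credential, salt), expected)

    def transfer(self, sender: str, sender_cred: str,
                 recipient: str, recipient_cred: str, amount: int) -> bool:
        # Both parties prove their identity to the bank, never to each other.
        if not (self.verify(sender, sender_cred) and self.verify(recipient, recipient_cred)):
            return False
        if amount <= 0 or self.balances[sender] < amount:
            return False
        self.balances[sender] -= amount
        self.balances[recipient] += amount
        return True

iron_bank = Bank()
iron_bank.enroll("Jaime", "hypothetical-secret-1", balance=500)
iron_bank.enroll("Cersei", "hypothetical-secret-2")
print(iron_bank.transfer("Jaime", "hypothetical-secret-1",
                         "Cersei", "hypothetical-secret-2", 200))  # True
print(iron_bank.balances)  # {'Jaime': 300, 'Cersei': 200}
```

Storing hashes instead of the credentials themselves is one answer to “How does the authority remain secure?”: a stolen record table does not directly reveal anyone’s secret.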

Let’s extend this bank example further. The teller handling this wire transfer is almost certainly a different person from the one who approves loans. The reasoning is the same as for verification: if the teller is compromised, there may be an invalid wire transfer, but the compromised teller cannot also issue a loan, because loans are not part of their duties. This also creates an opportunity to vet the people who hold information, so that the more trustworthy a person is, the more they are allowed to know. Think about what it means to have top secret security clearance: those without clearance cannot see the information. By separating people’s duties this way, we make the information harder to compromise, since no single person has access to all of it.
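The clearance idea reduces to a simple rule: a person may read a document only if their clearance is at least the document’s classification. The Python sketch below assumes four hypothetical levels; the rule itself mirrors the “no read up” (simple security) property of the Bell-LaPadula model.

```python
from enum import IntEnum

class Clearance(IntEnum):
    # Hypothetical levels, ordered from least to most sensitive.
    PUBLIC = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3

def can_read(subject: Clearance, document: Clearance) -> bool:
    """'No read up': allow reading only at or below the subject's own clearance."""
    return subject >= document

print(can_read(Clearance.TOP_SECRET, Clearance.SECRET))    # True
print(can_read(Clearance.CONFIDENTIAL, Clearance.SECRET))  # False
```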

I hinted at this in my previous blog post on cryptography, but we see the principle of least privilege in action every time a website is served over “https://”. The ‘s’ stands for “secure”, meaning the website has been verified as coming from its true source and the connection is encrypted. This relies on a protocol called SSL, or Secure Sockets Layer (now superseded by TLS, Transport Layer Security), which establishes credibility through trusted certificate authorities. Let me describe the process, because it is simple and it is something you see every day (even while reading this blog; check the URL).

When you request a webpage over HTTPS, the server sends you its certificate. A certificate binds the server’s identity (its domain name) to its public key, often an RSA key (remember, public-key crypto), and it carries a digital signature from a certificate authority (CA), essentially an entity with implicit trust. Before any page data is exchanged, your browser checks that signature using the CA’s public key, which ships preinstalled with your browser or operating system. A valid signature is the CA saying, “Yes, they are who they say they are”; an invalid or untrusted one means “No, don’t trust this source.” (A server can also ask the client for a certificate, known as mutual TLS, though most websites do not.) Only once the server’s identity is verified is information sent along the connection.
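To see how the browser can verify a certificate using only keys it already has, here is a toy RSA signature check in Python. It uses textbook RSA with the classic tiny primes 61 and 53, so it is completely insecure and only meant to show the shape of the idea: the CA signs a hash of the certificate with its private exponent, and the client verifies it with the CA’s public key from its trust store. The certificate text, `TRUST_STORE`, and the function names are hypothetical; real TLS uses large keys, padding schemes, and chains of certificates.

```python
import hashlib

# Toy CA key pair from the textbook example p = 61, q = 53 (insecure, illustration only).
N = 61 * 53   # public modulus, 3233
E = 17        # public exponent
D = 2753      # private exponent; E * D = 1 mod (60 * 52)

# The client's trust store: a short list of CA public keys, not every server's key.
TRUST_STORE = {"Toy Root CA": (N, E)}

def digest(cert_body: bytes) -> int:
    """Hash the certificate contents and reduce into the toy modulus range."""
    return int.from_bytes(hashlib.sha256(cert_body).digest(), "big") % N

def ca_sign(cert_body: bytes) -> int:
    """Done once by the CA, using its private exponent D."""
    return pow(digest(cert_body), D, N)

def client_verify(cert_body: bytes, signature: int, issuer: str) -> bool:
    """Done by the client, using only public information."""
    if issuer not in TRUST_STORE:
        return False  # unknown CA: don't trust this source
    n, e = TRUST_STORE[issuer]
    return pow(signature, e, n) == digest(cert_body)

cert = b"subject=example.com; public_key=<server RSA key>"
sig = ca_sign(cert)
print(client_verify(cert, sig, "Toy Root CA"))  # True
print(client_verify(cert, sig, "Unknown CA"))   # False
```

Note the division of labor: only the CA ever holds `D`, while the client needs nothing more than the handful of public keys in its trust store.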

In this example, we’ve established least privilege for the users (both the client and the server) and for the central authority. Recall our definition: something should have only the information required for the task at hand. This example follows suit by letting users learn the identities of those serving the data only when requested, and no one else’s. Users should not need a long list of every public key out there; for one, it would be enormous, and for another, how would every internet user update such a list simultaneously?

The avid reader may wonder how we can trust the central authority itself: couldn’t someone intercept the verification, or pose as the authority? Well, again cryptography comes to the rescue! I will leave it to the reader to explore the SSL/TLS certificate verification protocol further. The goal here is to show that a separation of duties has been established in order to create a system that can communicate securely (in this case, using RSA).

Biometrics

Traditionally, identity verification was simpler than it is now: communication was usually done in person (verify by face), and letters might carry a previously agreed-upon symbol or phrase for verification. These ideas persist today in PINs, passwords, and knowledge-based questions. But how can we verify identity through something biological (similar to looking at someone’s face)? You might remember iris readers or fingerprint scanners from popular movies, and for a long time, people thought these were inventions of the future with no viable implementation.

Today, we see biometric verification more and more, in technology like the iPhone’s Touch ID and the fingerprint scanners on laptops. Most consumer technology that uses biometrics relies on fingerprints, so for the remainder of this blog, that’s what we’ll focus on. Since we’ve spent so long talking about security, though, we should ask whether biometrics are a real security measure and not just science fiction.

The goal of a password or PIN is to create a secret that serves as a unique verification, and it must be sufficiently difficult to guess; otherwise, someone could steal it. It follows that a fingerprint reader must be accurate enough to capture an image that precisely records the print, so that the print can act like a password for its user.
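One way to quantify “sufficiently difficult to guess” is entropy: a secret of length L chosen uniformly at random from an alphabet of A symbols carries L × log2(A) bits. The Python below compares a few illustrative formats; it assumes uniformly random choices, which human-chosen passwords rarely are, so treat the numbers as upper bounds.

```python
import math

def entropy_bits(alphabet_size: int, length: int) -> float:
    """Bits of entropy for a secret chosen uniformly at random."""
    return length * math.log2(alphabet_size)

# Illustrative formats, assumed to be chosen uniformly at random.
examples = {
    "4-digit PIN": (10, 4),
    "8 lowercase letters": (26, 8),
    "12 characters from [A-Za-z0-9]": (62, 12),
}
for label, (alphabet, length) in examples.items():
    bits = entropy_bits(alphabet, length)
    print(f"{label}: {alphabet ** length:.3e} possibilities, about {bits:.1f} bits")
```

Each extra bit doubles the number of guesses an attacker needs in the worst case, which is why a 4-digit PIN is only safe behind lockouts and retry limits.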

Interestingly, researchers have not found significant evidence that fingerprints are unique to an individual. The debate remains unsettled, and either side could be right; however, it’s important to note that no matching method achieves a 100% success rate, meaning there will be some false negatives (rejecting the right person) and false positives (accepting the wrong one). This might make you nervous, but hold tight, because I’ll explain why this is not necessarily a bad thing.
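Because a fingerprint scan never matches its stored template exactly, readers compare a similarity score against a threshold, and that threshold is where false positives and false negatives come from. The Python sketch below computes the false accept rate (FAR) and false reject rate (FRR) at a few thresholds; the scores are invented for illustration, not measurements from any real sensor.

```python
def far_frr(genuine_scores: list[float], impostor_scores: list[float],
            threshold: float) -> tuple[float, float]:
    """Return (false accept rate, false reject rate) at the given threshold."""
    # False accept: an impostor's scan scores at or above the threshold.
    far = sum(s >= threshold for s in impostor_scores) / len(impostor_scores)
    # False reject: the genuine user's scan scores below the threshold.
    frr = sum(s < threshold for s in genuine_scores) / len(genuine_scores)
    return far, frr

# Hypothetical match scores in [0, 1]; invented for illustration.
GENUINE = [0.91, 0.85, 0.78, 0.95, 0.62, 0.88, 0.80, 0.93]
IMPOSTOR = [0.12, 0.30, 0.45, 0.22, 0.67, 0.18, 0.35, 0.50]

for t in (0.4, 0.6, 0.8):
    far, frr = far_frr(GENUINE, IMPOSTOR, t)
    print(f"threshold={t}: FAR={far:.3f} FRR={frr:.3f}")
```

Raising the threshold trades false accepts for false rejects; no single setting drives both to zero unless genuine and impostor scores never overlap.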

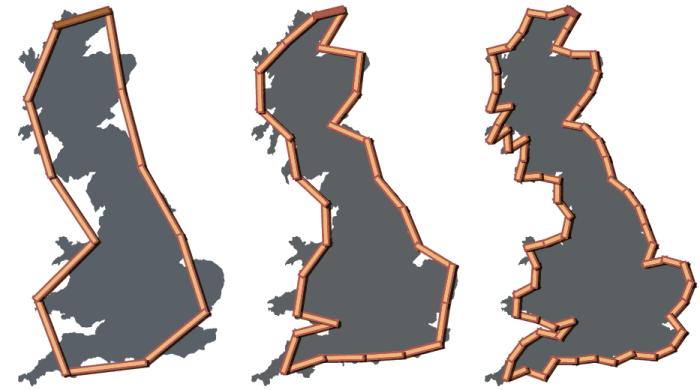

The benefit of using biometric data for identity verification, and the reason it matters less whether fingerprints are unique, is rooted in an idea called the Coastline Paradox. Cartography has long been an important part of civilization, and since the advent of GPS, our maps have become more and more accurate. But how accurate can they get? We map a coast by placing points along it and measuring the distance between them; placing more points gives a more accurate map and, counterintuitively, a longer measured coastline. Continue this to infinity, and we have infinitely many points to measure. Would I be wrong to say we are measuring infinity? The graphic above shows how we can take progressively more accurate measurements of a coastline.
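The paradox is easy to reproduce with the Koch curve, a standard idealized “coastline.” The Python sketch below refines a straight segment step by step and measures the resulting polyline: each refinement replaces every segment with four segments one third as long, so the measured length grows by a factor of 4/3 per level without bound. The Koch curve is a stand-in for a real coast, not data from an actual map.

```python
import math

Point = tuple[float, float]

def koch_step(points: list[Point]) -> list[Point]:
    """Replace each segment with the four-segment Koch 'bump'."""
    cos60, sin60 = 0.5, math.sqrt(3) / 2
    new_points = [points[0]]
    for (x1, y1), (x2, y2) in zip(points, points[1:]):
        dx, dy = (x2 - x1) / 3, (y2 - y1) / 3
        a = (x1 + dx, y1 + dy)            # one third of the way along
        b = (x1 + 2 * dx, y1 + 2 * dy)    # two thirds of the way along
        # Apex of the equilateral bump: rotate (dx, dy) by +60 degrees about a.
        peak = (a[0] + dx * cos60 - dy * sin60, a[1] + dx * sin60 + dy * cos60)
        new_points.extend([a, peak, b, (x2, y2)])
    return new_points

def measured_length(points: list[Point]) -> float:
    """Total length of the polyline through the given points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))

coast = [(0.0, 0.0), (1.0, 0.0)]
for level in range(6):
    print(f"level {level}: {len(coast) - 1:5d} segments, length {measured_length(coast):.4f}")
    coast = koch_step(coast)
```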

Extend this idea to biometrics. Fingerprints can be viewed as maps of our fingers, and they are seemingly infinite in detail. When we say two fingerprints are identical, maybe they would not be if only we could take a higher-resolution image. Herein lies my argument: so long as our technology captures progressively more accurate fingerprints, each harder to crack than the last, we may have a secure verification system on our hands (pun intended), one that could be used alongside cryptography or on its own to verify identity.
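Here is a toy version of the resolution argument in Python: two hypothetical 4x4 “ridge maps” that look identical after downsampling to 2x2 (each coarse cell counts the ridge pixels in its block) but differ at full resolution. The grids and the block-sum downsampling are invented for illustration; real fingerprint matchers compare features such as ridge endings and bifurcations, not raw pixel grids.

```python
Grid = list[list[int]]

def downsample(grid: Grid, factor: int) -> Grid:
    """Coarsen a square grid by summing each factor x factor block (a crude low-resolution scan)."""
    size = len(grid) // factor
    return [
        [sum(grid[r * factor + i][c * factor + j]
             for i in range(factor) for j in range(factor))
         for c in range(size)]
        for r in range(size)
    ]

# Hypothetical 4x4 ridge maps (1 = ridge pixel), invented for illustration.
PRINT_A = [
    [1, 0, 0, 1],
    [0, 0, 1, 0],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
]
PRINT_B = [
    [0, 1, 0, 1],
    [0, 0, 0, 1],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
]

print("match at low resolution: ", downsample(PRINT_A, 2) == downsample(PRINT_B, 2))  # True
print("match at full resolution:", PRINT_A == PRINT_B)                                # False
```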

Conclusion

Apple was one of the first to successfully deploy progressively more intricate and accurate fingerprint reading, with the Touch ID readers on newer iPhones. The groundbreaking idea was a fingerprint reader that learns, continuously updating its internal image of the fingerprint each time a user scans their finger. Since then, Android phones in various flavors have employed similar techniques, and we have witnessed the emergence of biometrics in a wide-reaching consumer good.

To bring our two ideas together, biometrics provide the crucial identifying information required to create separation of duties. Since we are always searching for more distinctive, harder-to-guess identifiers, fingerprints seem a perfect candidate.

As a small teaser, we’ll see how Apple fully utilizes biometrics and the principle of least privilege with technology in a later blog post…

Some Important Resources I Used

Strengthening Forensic Science in the United States: A Path Forward. (2009). Washington, D.C.: National Academies Press.

Applying the Principle of Least Privilege to User Accounts on Windows XP. (n.d.). Retrieved April 06, 2016, from https://technet.microsoft.com/en-us/library/bb456992.aspx

N. (2013, January 28). Mapping Monday: The Coastline Paradox. Retrieved April 06, 2016, from http://blog.education.nationalgeographic.com/2013/01/28/mapping-monday-the-coastline-paradox/
